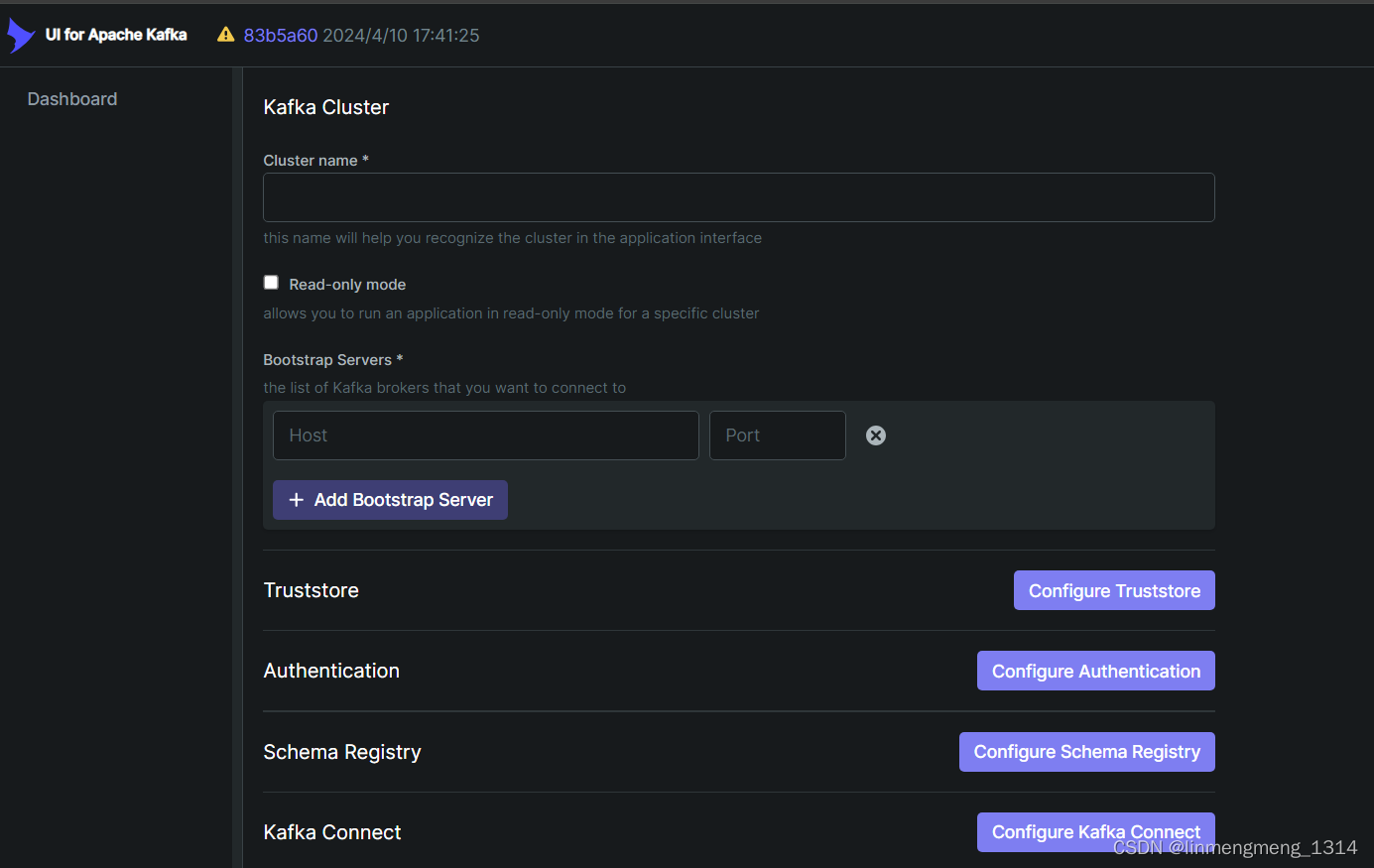

Three listener connection modes are configured here:

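- The first is the plaintext listener on port 9092, reachable through the service name kafka;

- The second is for communication inside the cluster, on port 9093;

- The third is for plaintext access from external machines, on port 9094. In actual use, localhost must be replaced with the IP of the current machine;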
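The three listeners described above could be written in a Docker Compose file roughly as follows. This is a minimal sketch, assuming the bitnami/kafka image running in KRaft mode. The listener names PLAINTEXT, CONTROLLER and EXTERNAL, the image tag, and the node ID are assumptions for illustration, not values confirmed by the original post.

```yaml
services:
  kafka:
    image: bitnami/kafka:3.6            # assumed image and tag
    ports:
      - "9092:9092"
      - "9094:9094"
    environment:
      # Single combined broker/controller node (assumed KRaft setup)
      - KAFKA_CFG_NODE_ID=0
      - KAFKA_CFG_PROCESS_ROLES=controller,broker
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=0@kafka:9093
      # Three listeners: 9092 plaintext, 9093 cluster-internal, 9094 external
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093,EXTERNAL://:9094
      # Addresses handed to clients; replace localhost with the host's IP
      # when clients connect from other machines
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092,EXTERNAL://localhost:9094
      # Every listener name maps to the PLAINTEXT security protocol
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,EXTERNAL:PLAINTEXT,PLAINTEXT:PLAINTEXT
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=PLAINTEXT
```

Containers on the same Compose network would connect to kafka:9092, while a client on the host would use the address advertised for the 9094 listener.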

For a single-node Kafka that should accept connections from external machines, configuring KAFKA_CFG_LISTENERS and KAFKA_CFG_ADVERTISED_LISTENERS is enough.
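For the single-node case, the two variables might look like the fragment below. It is only a sketch: 192.0.2.10 is a placeholder address standing in for the machine's real IP, and any other settings the image needs to start (for example its KRaft or ZooKeeper options) are left out.

```yaml
environment:
  # Bind the broker on all interfaces inside the container
  - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092
  # Address returned to clients; placeholder IP, replace with the host's real IP
  - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://192.0.2.10:9092
```

The advertised address matters because clients reconnect to whatever the broker reports in its metadata, so it has to be reachable from the external machine.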
